A Smart Manufacturing IT Architecture Needs Data Distribution, Consolidation and Stream Processing

It is easy to come out of a presentation on “big data” these days thinking that the future of manufacturing information technology (IT) is a combination of three things: connected machines, data stored in a “data lake,” and an analytical engine. However, those closer to manufacturing systems management know that drawing a big data lake circle in the center of a diagram vastly oversimplifies the real data storage and processing needs.

We need to dig deeper and examine both new and older technologies against three requirements: distributing data from its source to departmental functions, consolidating views of data for multiple organizational levels, and taking advantage of the new processing opportunities that data streaming technology presents.

The traditional data landscape across an enterprise is a myriad of islands of information, with varied data structures and indexing schemes controlled by each department’s applications for functions including Procurement, Inventory, Production, Quality, Human Resources, Sales, Marketing, and Finance. Rather than trying to create a single mega system to manage the enterprise (one designed from the ground up with a consistent and integrated data model), organizations need ways to integrate, exchange and manage data across the departmental functions.

Several methods and technologies are available to perform data distribution, processing and consolidation tasks, including:

- Enterprise Application Integration (EAI)

- Extract, Transform and Load (ETL)

- Enterprise Information Integration (EII)

- Data Stream Processing and Streaming Analytics

These techniques should be viewed as complementary, each with a different function in the overall IT stack. In fact, many vendors are combining several of these technologies into unified platforms in recognition of how they complement each other in different applications.

Enterprise Application Integration (EAI) – Application-to-application integration via message-based, transaction-oriented brokering and data transformation. EAI focuses on integrating business-level processes and reacting to events that occur during those processes, using transaction data messages to hand off to other business processes or systems. EAI integration mappings need to be updated when applications change their integration interfaces. It can be simpler for the end user than other methods because data is accessed through their main departmental application and the IT department handles integration in the background. Hub-and-spoke methods using middleware such as Enterprise Service Bus (ESB) products facilitate message brokering between multiple applications.
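The hub-and-spoke idea can be sketched in a few lines of Python. This is only an in-process toy, not how any particular ESB product works; the class `MessageBroker`, the topic name, and the field names are all hypothetical and chosen for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Message = Dict[str, object]
Transform = Callable[[Message], Message]
Handler = Callable[[Message], None]


class MessageBroker:
    """Toy hub: applications publish to a topic, and each subscriber
    receives the message after its own data transformation."""

    def __init__(self) -> None:
        self._routes: Dict[str, List[Tuple[Transform, Handler]]] = defaultdict(list)

    def subscribe(self, topic: str, transform: Transform, handler: Handler) -> None:
        self._routes[topic].append((transform, handler))

    def publish(self, topic: str, message: Message) -> int:
        """Deliver the message to every subscriber; return the delivery count."""
        delivered = 0
        for transform, handler in self._routes[topic]:
            handler(transform(message))
            delivered += 1
        return delivered


def to_inventory_format(msg: Message) -> Message:
    # Hypothetical mapping to the field names an inventory system expects.
    return {"sku": msg["part_number"], "qty_delta": msg["quantity_completed"]}


def to_quality_format(msg: Message) -> Message:
    # Hypothetical mapping to an inspection request for a quality system.
    return {"order_id": msg["order_id"], "inspect": True}


inventory_inbox: List[Message] = []
quality_inbox: List[Message] = []

broker = MessageBroker()
broker.subscribe("production.order_completed", to_inventory_format, inventory_inbox.append)
broker.subscribe("production.order_completed", to_quality_format, quality_inbox.append)

# The production application publishes one business event; the hub fans it out.
count = broker.publish(
    "production.order_completed",
    {"order_id": "WO-1001", "part_number": "BRKT-42", "quantity_completed": 25},
)
```

The mapping functions are the part that has to change when an application changes its integration interface, which is the maintenance cost noted above.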

Extract, Transform and Load (ETL) – Set-oriented point-in-time transformation of data for migration, consolidation and data warehousing. It can process very large amounts of data during non-peak use times and deliver data optimized for reporting, while freeing up transactional databases from the computational burden of complex queries. ETL scripts need to be updated when applications change their query web services or transactional data structures.
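As a rough illustration of a set-oriented, point-in-time ETL job, the sketch below uses Python's built-in `sqlite3` module with two in-memory databases standing in for a transactional system and a data warehouse. The table names, columns, and sample rows are assumptions made up for the example.

```python
import sqlite3

# Stand-in for a transactional source system (hypothetical schema).
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE work_orders "
    "(order_id TEXT, part_number TEXT, qty_good INTEGER, qty_scrap INTEGER, completed_on TEXT)"
)
source.executemany(
    "INSERT INTO work_orders VALUES (?, ?, ?, ?, ?)",
    [
        ("WO-1", "BRKT-42", 95, 5, "2024-03-04"),
        ("WO-2", "BRKT-42", 90, 10, "2024-03-04"),
        ("WO-3", "HSG-7", 48, 2, "2024-03-05"),
    ],
)

# Stand-in for the warehouse, with a structure optimized for reporting.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE daily_yield "
    "(day TEXT, part_number TEXT, total_good INTEGER, total_scrap INTEGER, yield_pct REAL)"
)


def run_etl(src: sqlite3.Connection, dst: sqlite3.Connection) -> int:
    # Extract: one set-oriented query against the source.
    rows = src.execute(
        "SELECT completed_on, part_number, SUM(qty_good), SUM(qty_scrap) "
        "FROM work_orders GROUP BY completed_on, part_number "
        "ORDER BY completed_on, part_number"
    ).fetchall()
    # Transform: derive the yield percentage that reports need.
    transformed = [
        (day, part, good, scrap, round(100.0 * good / (good + scrap), 1))
        for day, part, good, scrap in rows
    ]
    # Load: replace the reporting table with the new point-in-time result.
    dst.execute("DELETE FROM daily_yield")
    dst.executemany("INSERT INTO daily_yield VALUES (?, ?, ?, ?, ?)", transformed)
    dst.commit()
    return len(transformed)


loaded = run_etl(source, warehouse)
report = warehouse.execute(
    "SELECT day, part_number, yield_pct FROM daily_yield ORDER BY day, part_number"
).fetchall()
```

Report users query only `daily_yield`, so the complex aggregation runs once, off-peak, rather than against the transactional tables each time.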

Enterprise Information Integration (EII) – Transparent data access and transformation layer providing a virtual consolidated view of data across multiple enterprise data sources. In other words, EII provides federated data queries across multiple data sources via one meta model. EII models can be placed on top of data warehouse models and can join structured and unstructured data. EII can provide real-time read and write access and manage data placement for performance, accuracy and availability. It can be hard to implement when key fields and data types do not match across source data systems. It can also consume network bandwidth and place high demand on source systems during peak hours. Caching schemes improve performance by extracting data from the source systems once for reuse by multiple EII queries. The Hadoop toolkit is an example of a technology stack that enables EII techniques.
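A minimal sketch of a federated view, assuming two hypothetical sources: an ERP parts table (simulated with `sqlite3`) and a list of shop-floor scrap records whose part keys are formatted differently. The key normalization and the cached extract are illustrative choices, not a description of any specific EII product.

```python
import sqlite3
from functools import lru_cache

# Source 1: hypothetical ERP parts master.
erp = sqlite3.connect(":memory:")
erp.execute("CREATE TABLE parts (part_no TEXT, description TEXT, unit_cost REAL)")
erp.executemany(
    "INSERT INTO parts VALUES (?, ?, ?)",
    [("BRKT-42", "Bracket", 3.5), ("HSG-7", "Housing", 12.0)],
)

# Source 2: hypothetical shop-floor records with a different key format.
mes_records = [
    {"part": "brkt42", "scrap_qty": 15},
    {"part": "hsg7", "scrap_qty": 2},
]


def normalize_key(raw: str) -> str:
    """Reconcile mismatched key formats across the two sources."""
    return raw.replace("-", "").lower()


@lru_cache(maxsize=1)
def erp_parts_by_key():
    # Cached extract: repeated federated queries reuse this result instead
    # of hitting the source system again (at the cost of possible staleness).
    rows = erp.execute("SELECT part_no, description, unit_cost FROM parts").fetchall()
    return {normalize_key(part_no): (part_no, desc, cost) for part_no, desc, cost in rows}


def scrap_cost_view():
    """Virtual consolidated view: joined on demand, never stored."""
    parts = erp_parts_by_key()
    view = []
    for rec in mes_records:
        part_no, desc, cost = parts[normalize_key(rec["part"])]
        view.append(
            {"part_no": part_no, "description": desc, "scrap_cost": rec["scrap_qty"] * cost}
        )
    return view


result = scrap_cost_view()
```

The `normalize_key` step is where the difficulty described above shows up: when key fields do not match across systems, someone has to define and maintain the reconciliation rule.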

EII techniques are usually leveraged by data scientists for ad-hoc analysis, while ETL techniques turn data into simpler structures for the everyday reporting needs of users who do not need to understand the complicated data structures of source systems.

Data Stream Processing and Streaming Analytics – In contrast to the traditional database model, where data is stored, indexed and subsequently queried, stream processing takes the inbound data while it is in flight, in real time through an EAI process, and can look for alert conditions and patterns in the data stream or in an in-memory data aggregation, the “real-time data mart.”
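The contrast with store-then-query can be sketched with a Python generator that evaluates each reading as it arrives and keeps only a small in-memory window. The function name `detect_overheat`, the window size, the 80.0 limit, and the sample readings are assumptions for illustration.

```python
from collections import deque
from typing import Iterable, Iterator, Tuple


def detect_overheat(
    readings: Iterable[Tuple[str, float]],
    window: int = 3,
    limit: float = 80.0,
) -> Iterator[Tuple[str, float]]:
    """Yield (timestamp, moving_average) whenever the average of the
    last `window` readings exceeds `limit`."""
    recent = deque(maxlen=window)  # in-memory aggregation; old values fall off
    for timestamp, value in readings:
        recent.append(value)
        if len(recent) == window:
            average = sum(recent) / window
            if average > limit:
                yield timestamp, average


# Hypothetical temperature readings arriving one per second.
stream = [
    ("10:00:00", 70.0),
    ("10:00:01", 72.0),
    ("10:00:02", 74.0),
    ("10:00:03", 85.0),
    ("10:00:04", 90.0),
    ("10:00:05", 95.0),
]

alerts = list(detect_overheat(stream))
# alerts == [("10:00:04", 83.0), ("10:00:05", 90.0)]
```

Nothing is written to a database before the alert fires; the only state held is the last three readings.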

Some evolving combinations of these techniques and technology stacks use data stream processing as a pre-processor during the extract phase of an ETL process, before the data is transformed and stored in the data warehouse.
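One way to picture such a pre-processor, as a sketch only: raw readings are condensed into per-minute summaries while still in the stream, so that the later ETL steps handle far fewer rows. The record layout and the function name `summarize_per_minute` are hypothetical.

```python
from itertools import groupby
from statistics import mean


def summarize_per_minute(readings):
    """Condense raw readings into one summary record per machine per minute.

    `readings` is an iterable of (machine_id, "HH:MM:SS", value) tuples and
    is assumed to arrive ordered by machine and then by time, because
    itertools.groupby only groups consecutive items.
    """
    def minute_key(reading):
        machine_id, timestamp, _ = reading
        return machine_id, timestamp[:5]  # "HH:MM"

    for (machine_id, minute), group in groupby(readings, key=minute_key):
        values = [value for _, _, value in group]
        yield {
            "machine": machine_id,
            "minute": minute,
            "count": len(values),
            "mean": mean(values),
            "max": max(values),
        }


raw = [
    ("M1", "10:00:05", 70.0),
    ("M1", "10:00:35", 74.0),
    ("M1", "10:01:05", 90.0),
]

# These summaries, not the raw readings, would be handed to the
# transform and load steps of the ETL job.
summaries = list(summarize_per_minute(raw))
```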

Data in the Smart Manufacturing IT Framework

Evolving Smart Manufacturing systems and applications leverage these different data handling techniques to improve real-time analysis where it is needed, and to improve the availability of structured and unstructured data for the types of reporting and analysis where real-time data is not needed or even desired. For example, the management team's weekly review of the organization's Key Performance Indicators (KPIs) should be based on data mined at a fixed time, not on data mined in real time at random times that yields different results for every query. We don’t want each manager showing up to the weekly meeting with a different set of data in their hands. In contrast, we want to know as soon as possible when a production machine is about to go down, especially if it will stop the production line.
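The KPI point can be illustrated with a small sketch: a yield calculation pinned to a fixed cutoff returns the same number no matter when it is run, while a "live" query drifts as new records arrive. The record fields, dates, and the function `first_pass_yield` are invented for the example.

```python
from datetime import datetime


def first_pass_yield(records, as_of):
    """Yield percentage using only records completed at or before `as_of`."""
    included = [r for r in records if r["completed_at"] <= as_of]
    good = sum(r["good"] for r in included)
    total = sum(r["good"] + r["scrap"] for r in included)
    return round(100.0 * good / total, 1) if total else None


# Agreed cutoff for the weekly review (hypothetical).
cutoff = datetime(2024, 3, 8, 17, 0)

records = [
    {"completed_at": datetime(2024, 3, 7, 9, 30), "good": 95, "scrap": 5},
    {"completed_at": datetime(2024, 3, 8, 14, 0), "good": 85, "scrap": 15},
]

# One manager runs the report right after the cutoff.
kpi_first_run = first_pass_yield(records, cutoff)

# Production continues and a new record arrives before the meeting.
records.append({"completed_at": datetime(2024, 3, 9, 8, 0), "good": 50, "scrap": 50})

# Another manager runs the same report later: same cutoff, same answer.
kpi_second_run = first_pass_yield(records, cutoff)

# A "live" query at an arbitrary later time gives a different number.
kpi_live = first_pass_yield(records, datetime(2024, 3, 9, 12, 0))
```

Here both cutoff-based runs return 90.0, while the live query returns 76.7.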

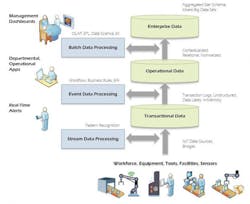

The accompanying figure shows an example of how all these techniques could work together in an enterprise IT architecture, connecting different types of data to different types of data processing applications. In practice, any one organization might have additional parallel paths, but this simplified view illustrates the general idea of how the pieces fit together.

Example of Layered Data Processing in a Smart Manufacturing IT Architecture

About the Author

Conrad Leiva

VP Product Strategy and Alliances

Based in the Los Angeles area, Conrad Leiva is vice president of ecosystem and workforce development at CESMII – the Smart Manufacturing Institute. He is accelerating Smart Manufacturing adoption through partners and education programs. A recognized industry authority, he engages business leaders, educators and practitioners to share the How and Why of leveraging information technology in manufacturing. Conrad has over 30 years of experience in aerospace manufacturing and 10 years working on Smart Manufacturing practices with industry leaders at MESA International.